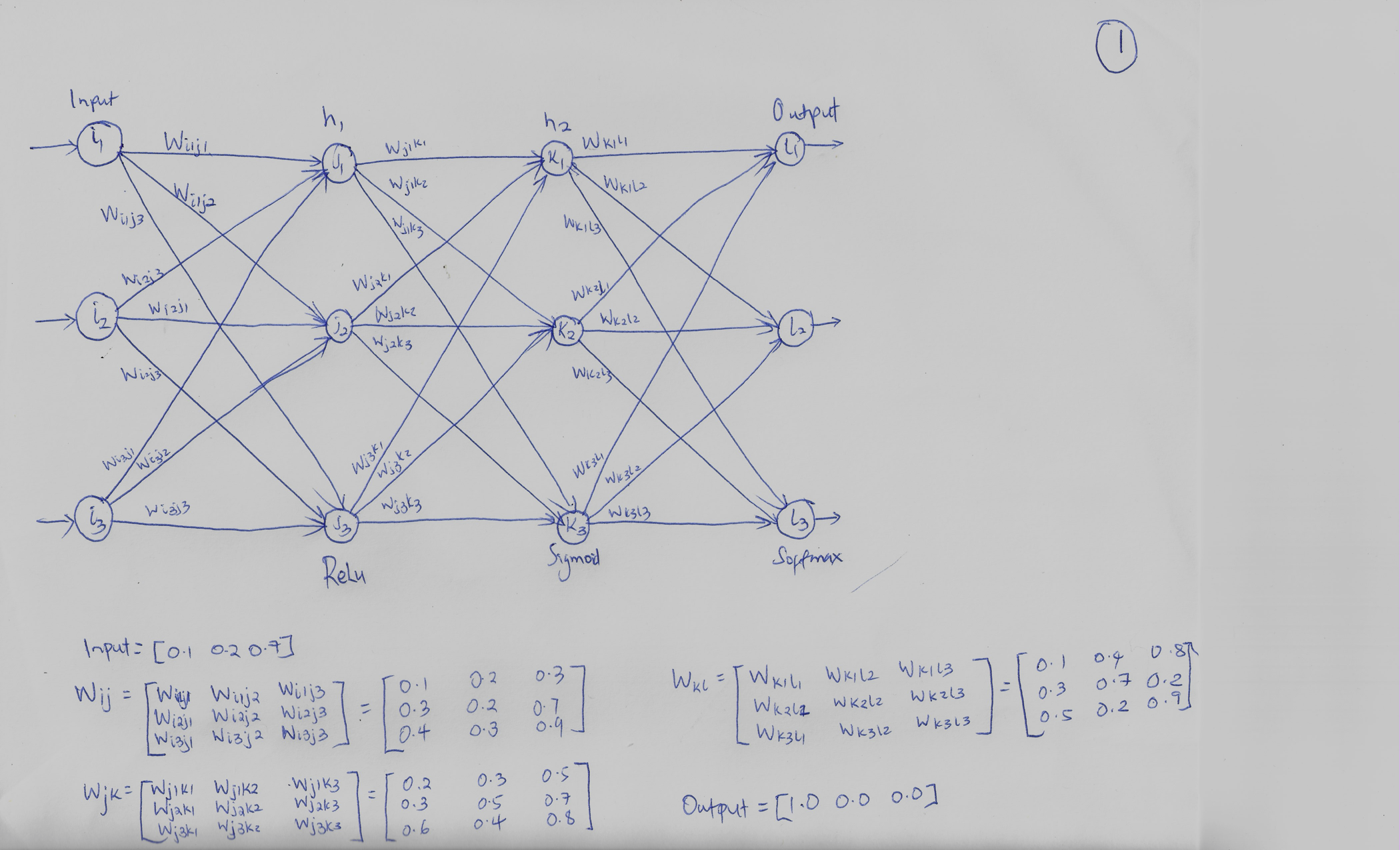

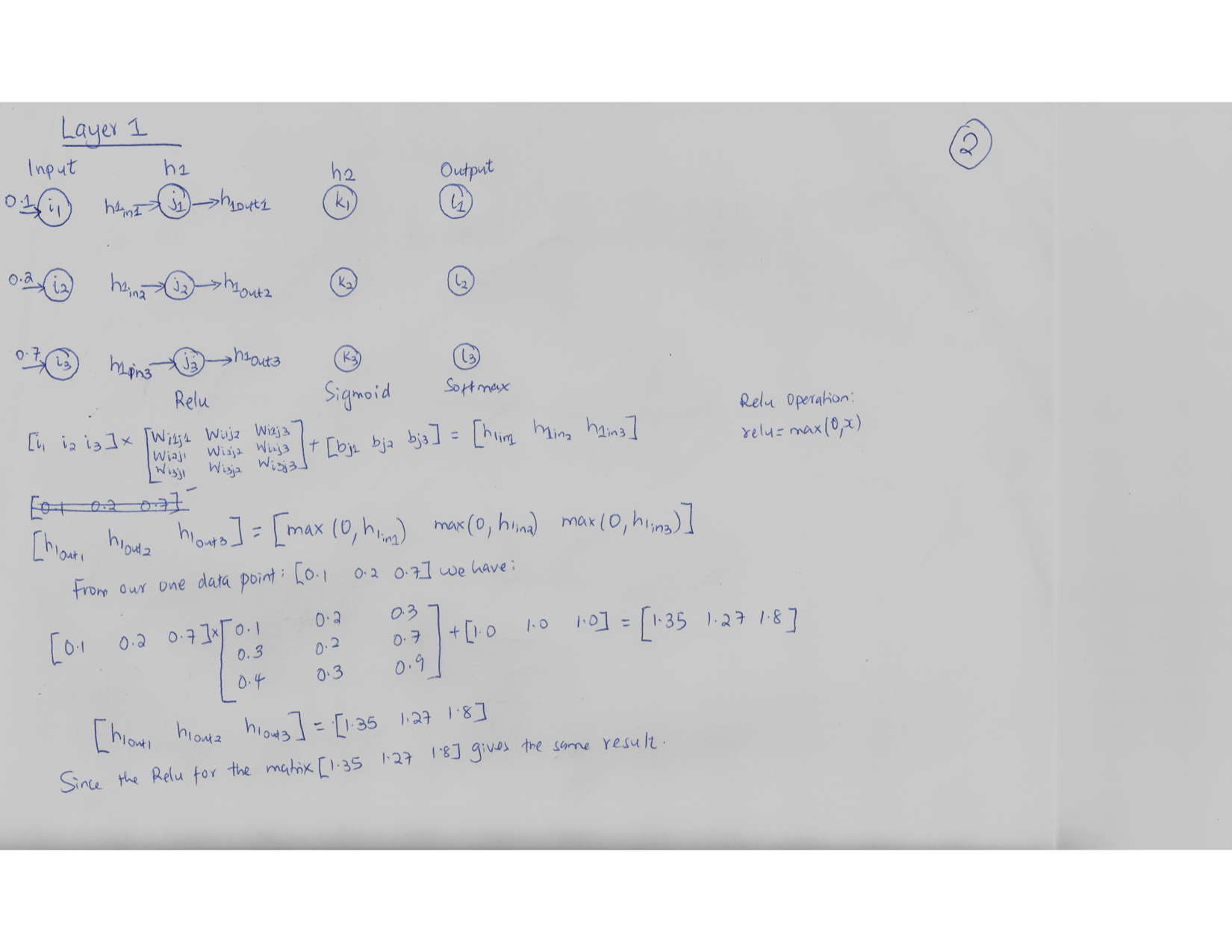

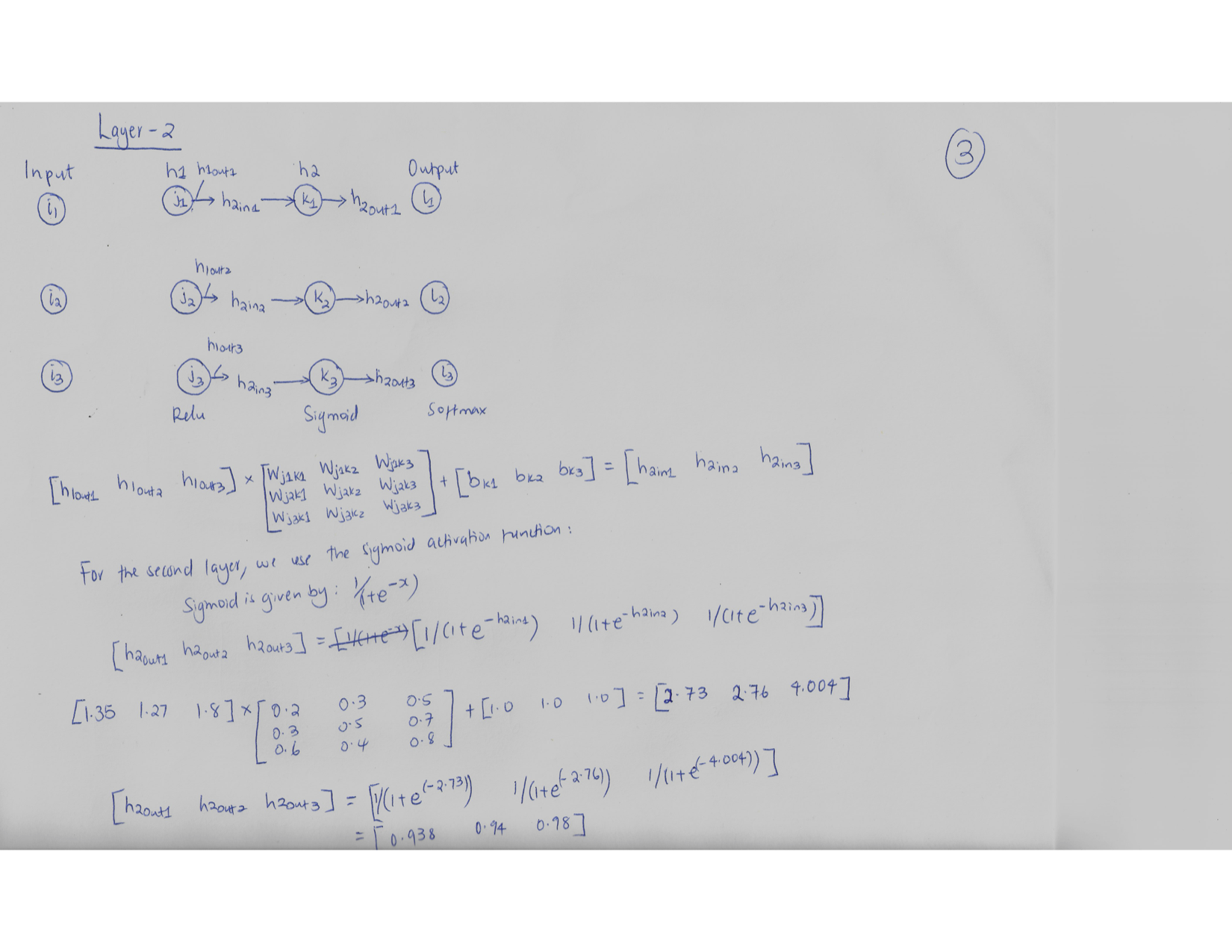

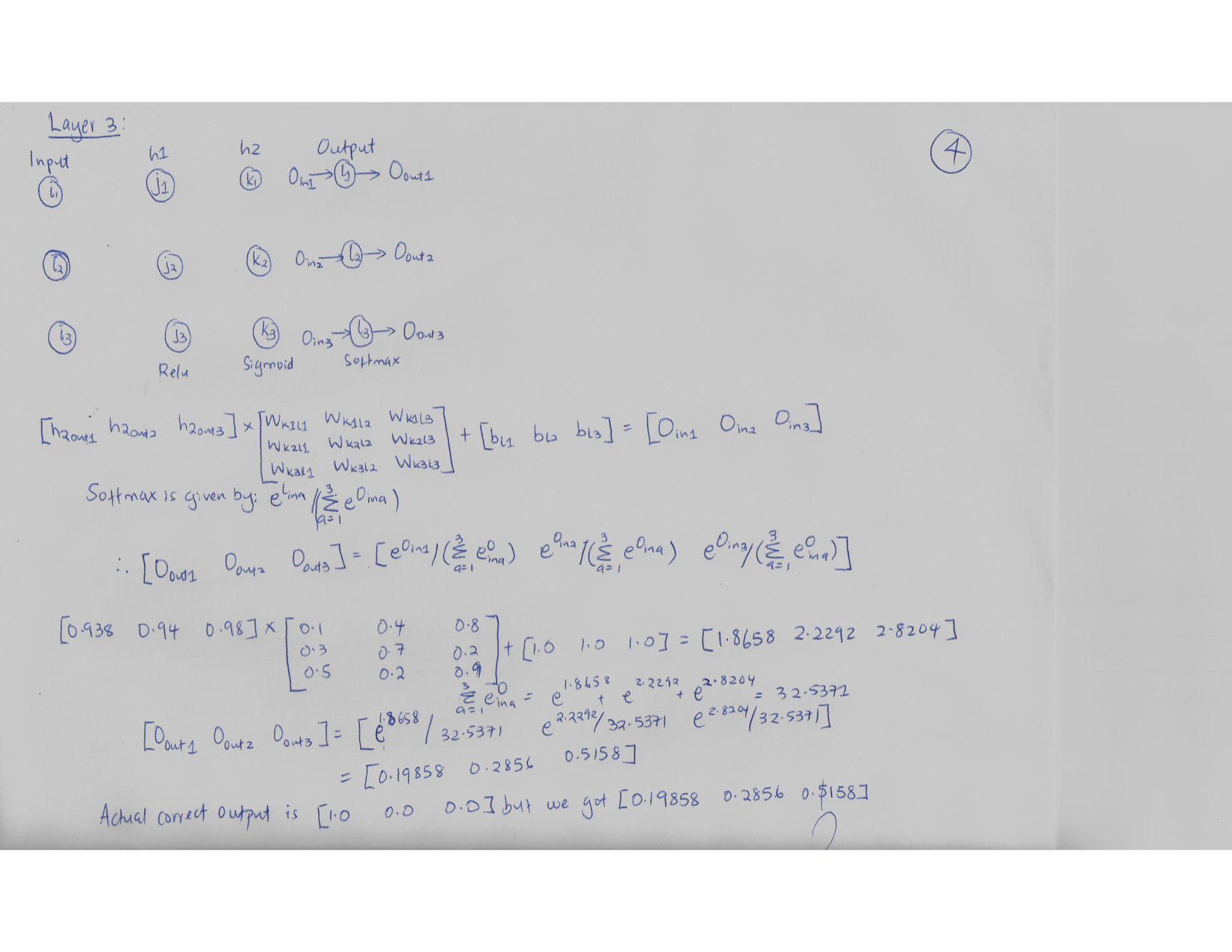

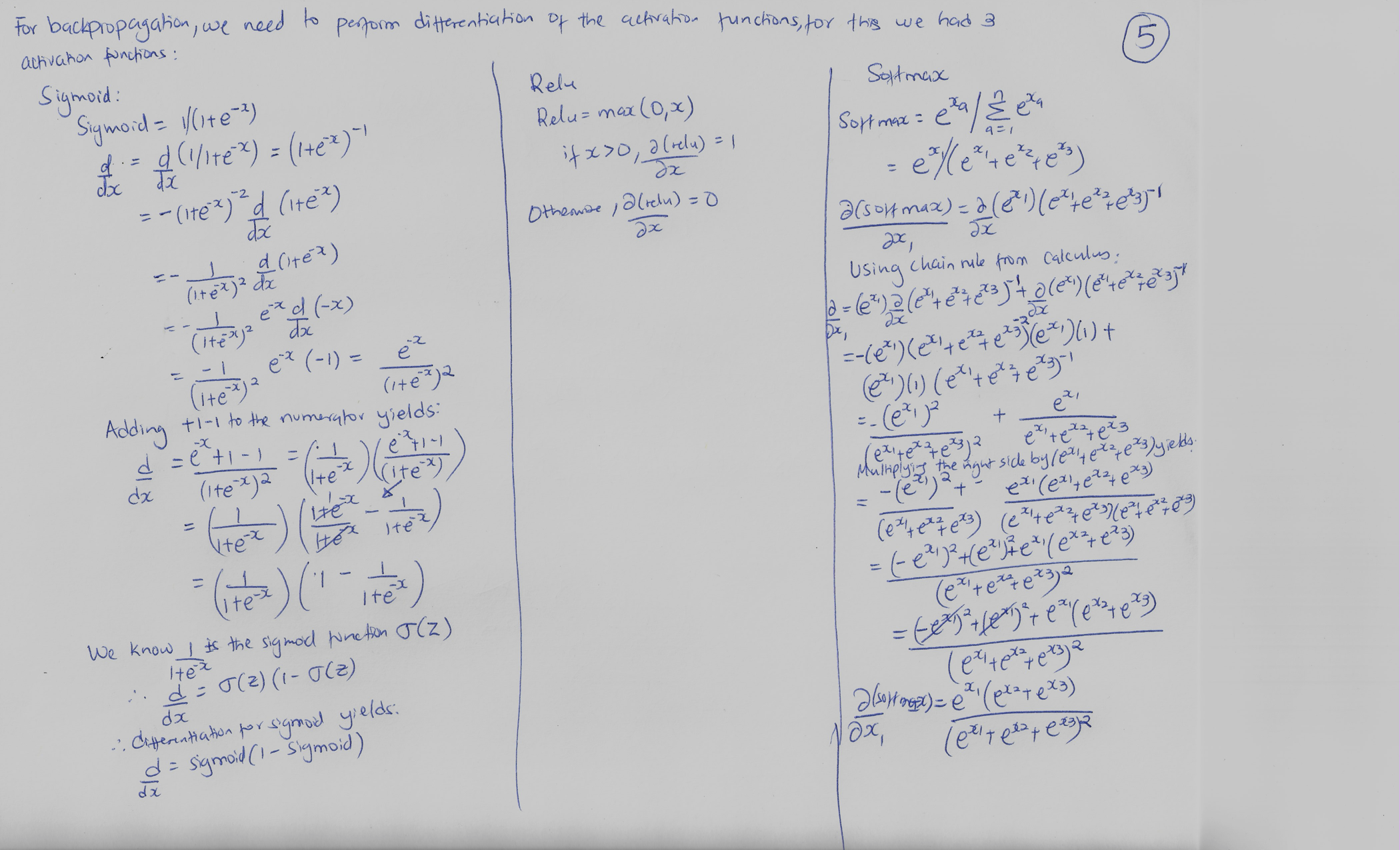

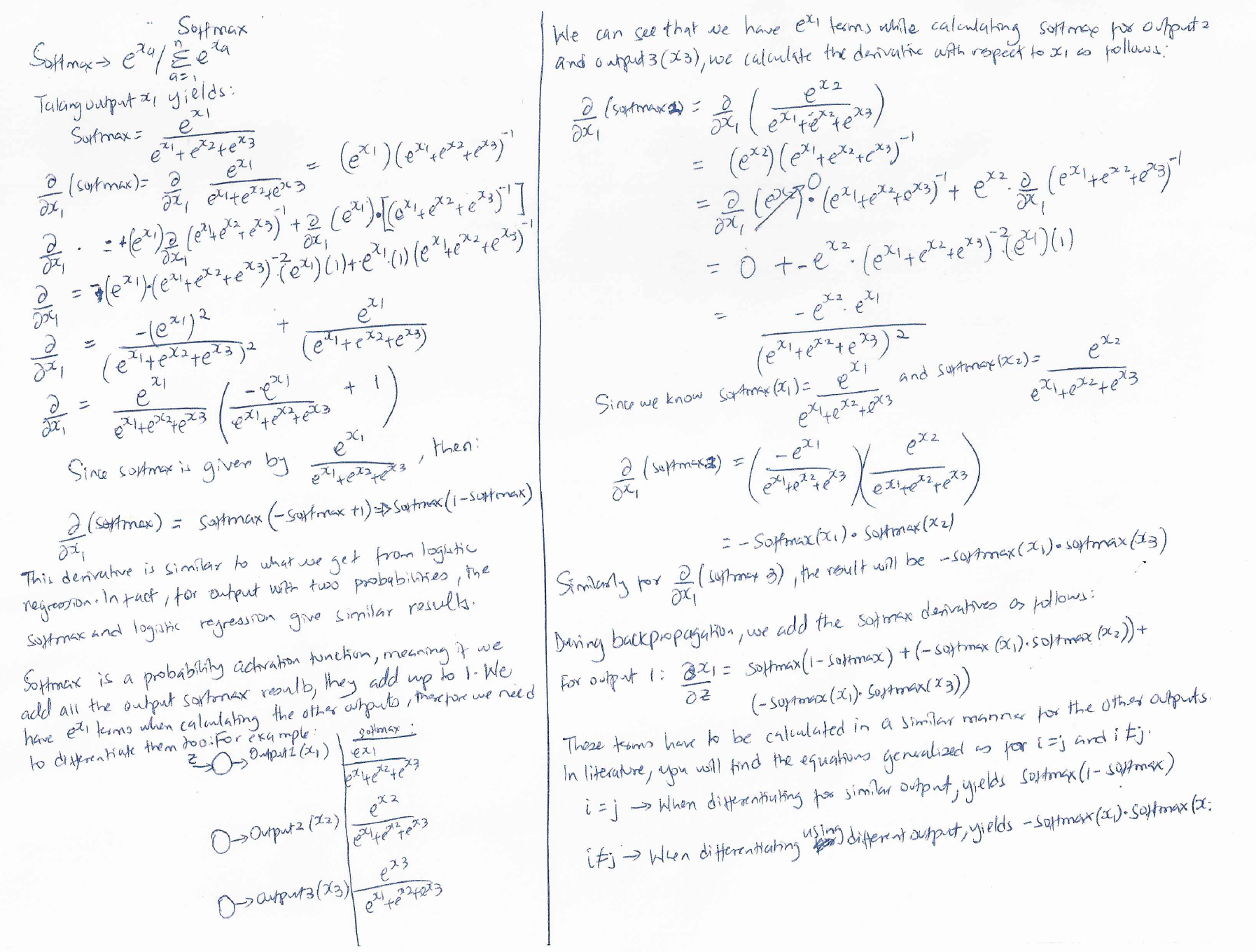

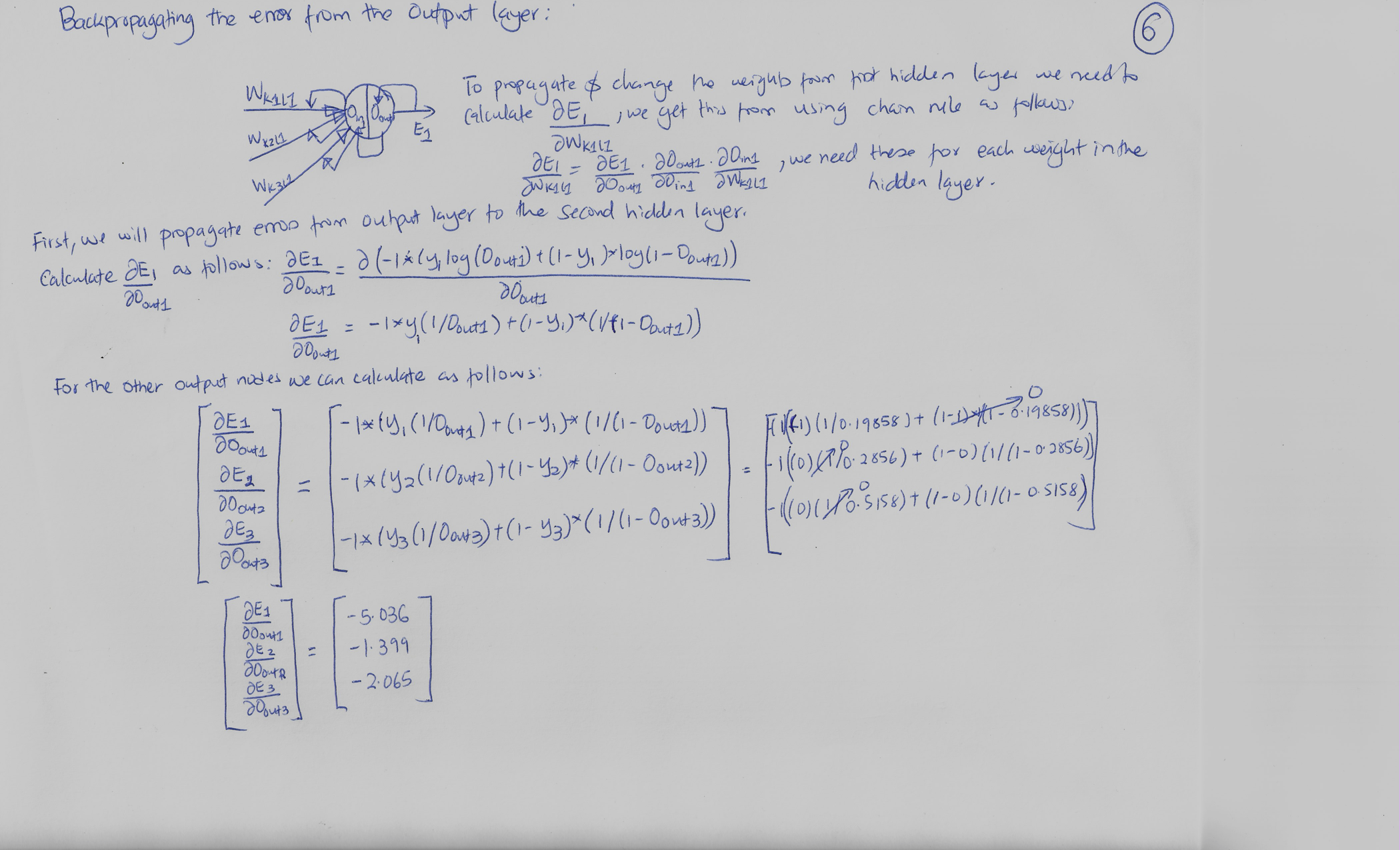

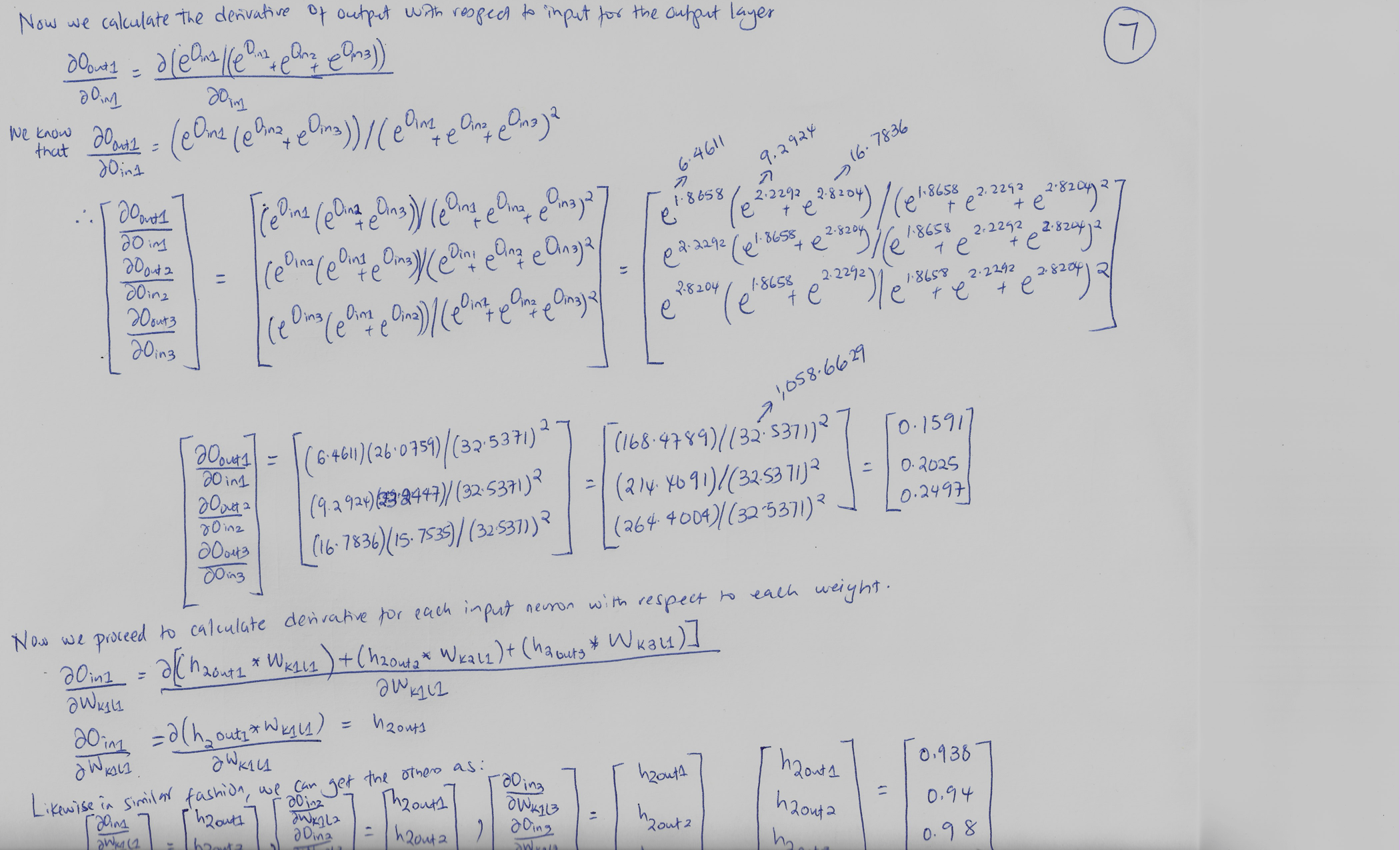

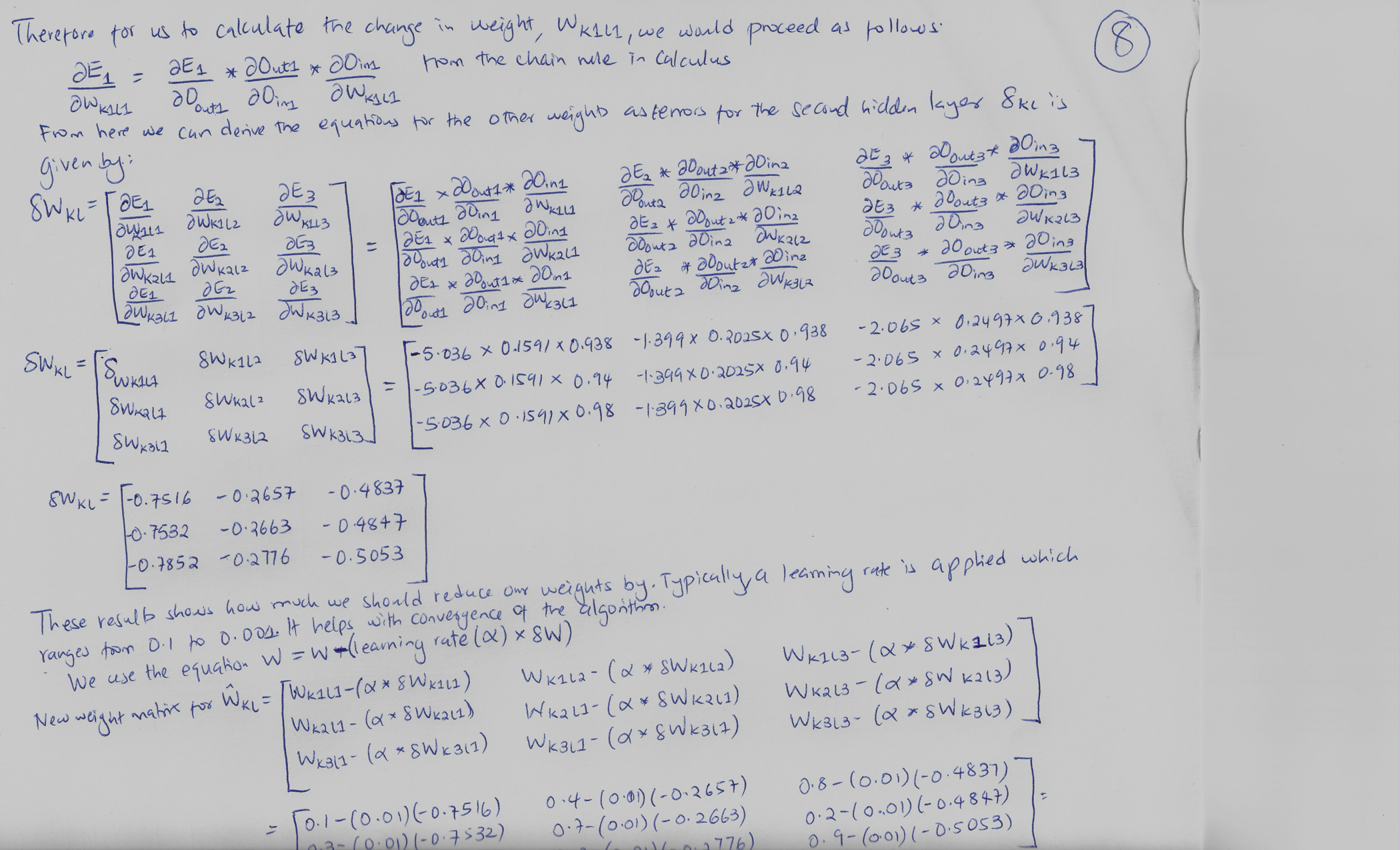

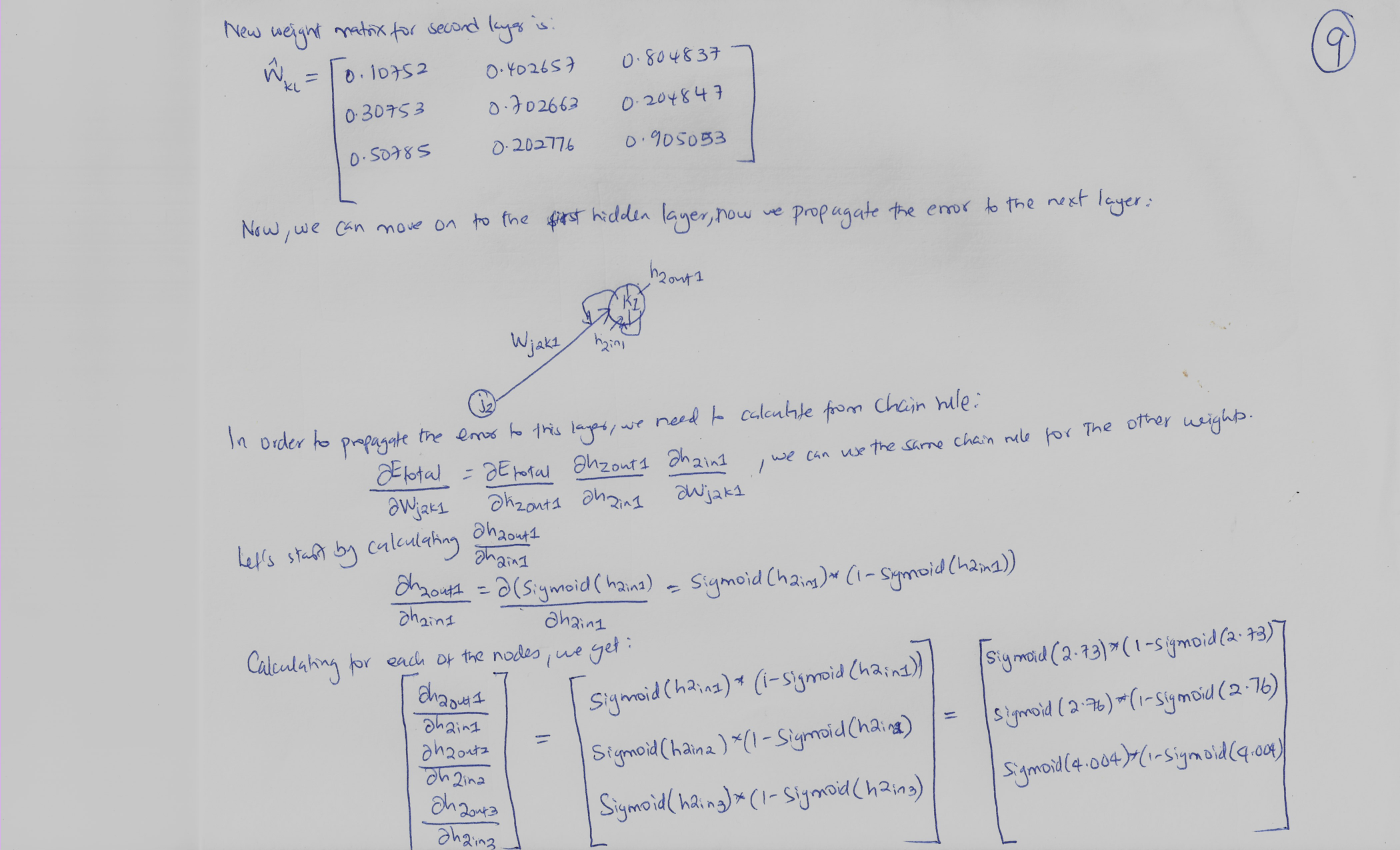

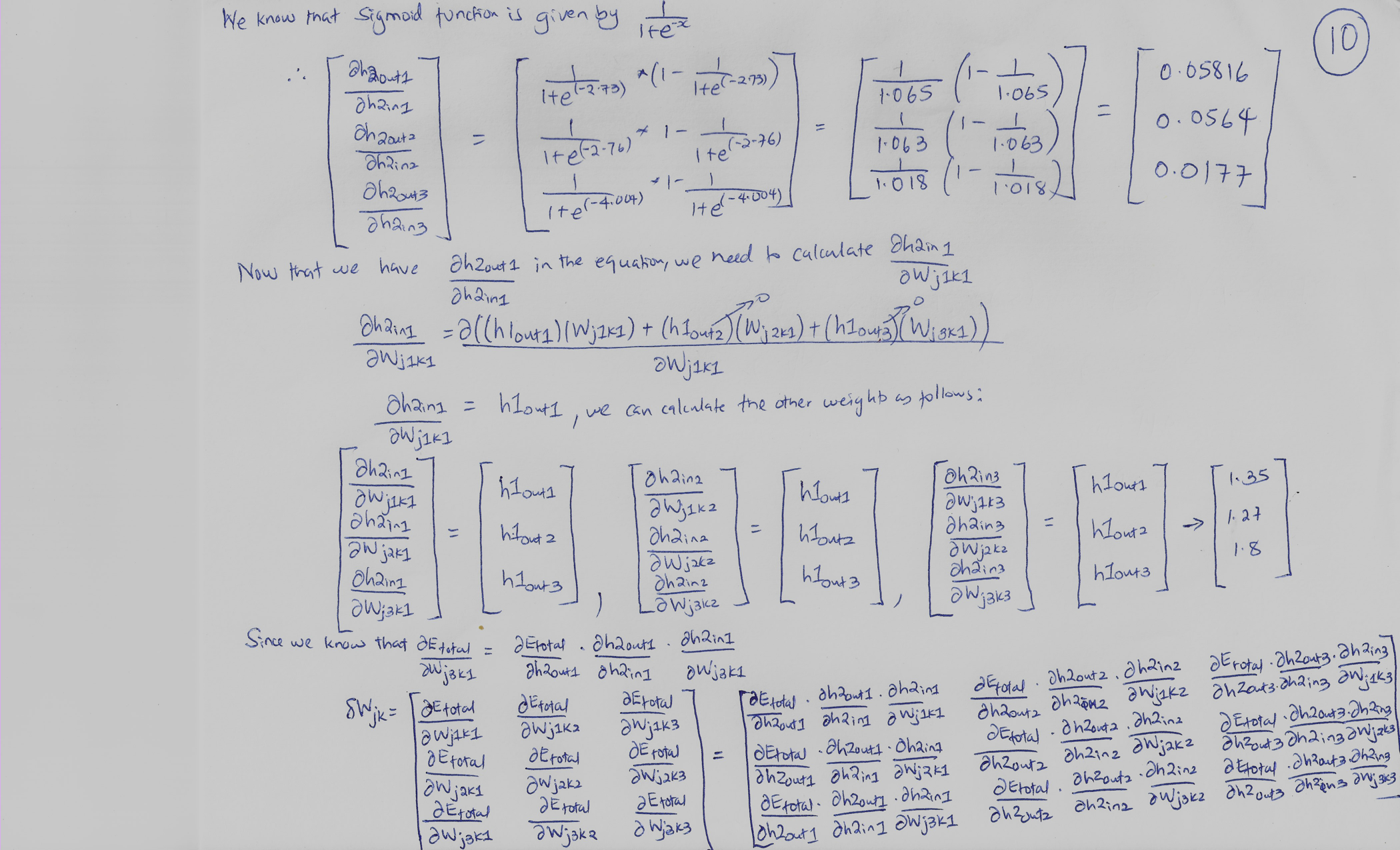

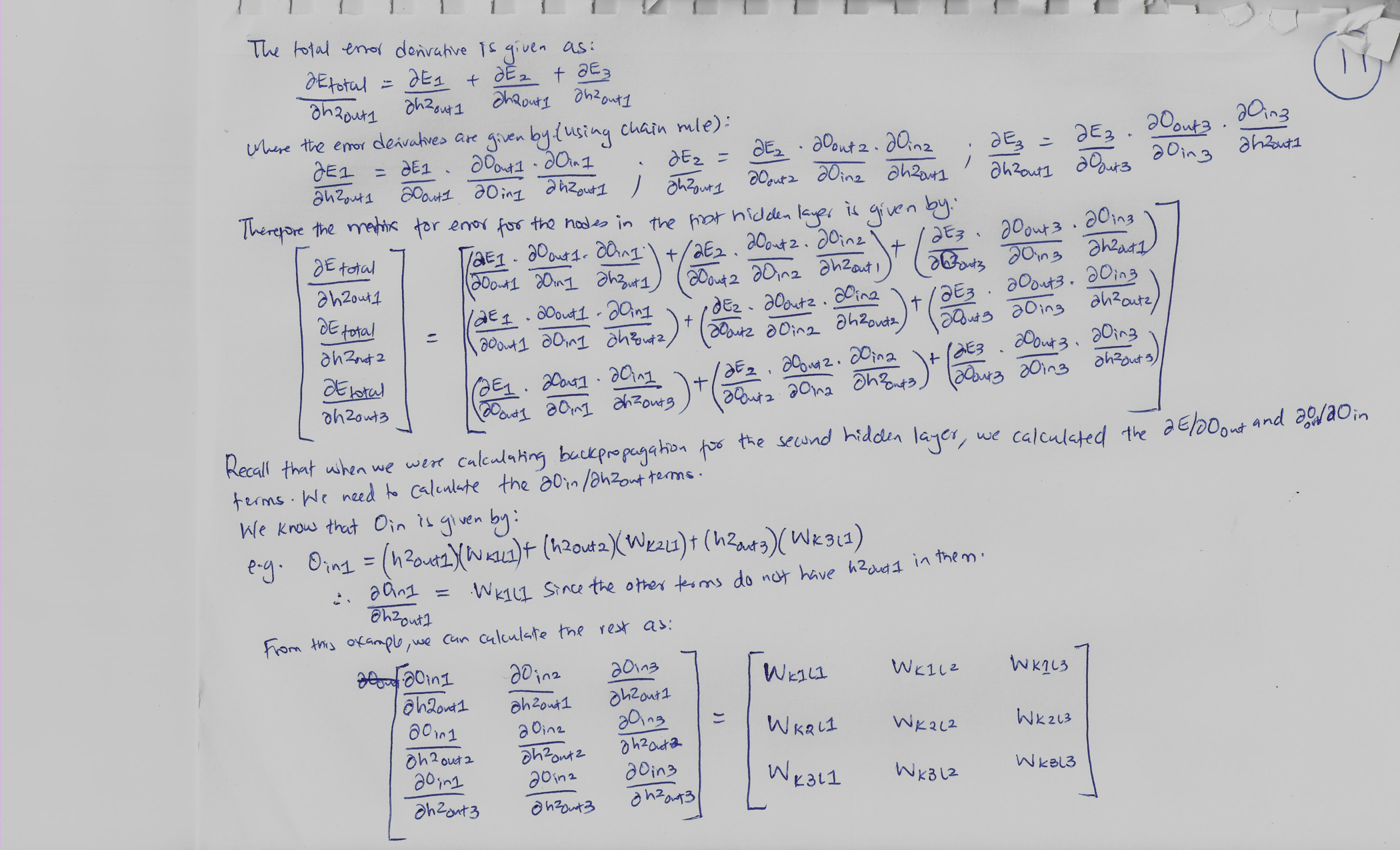

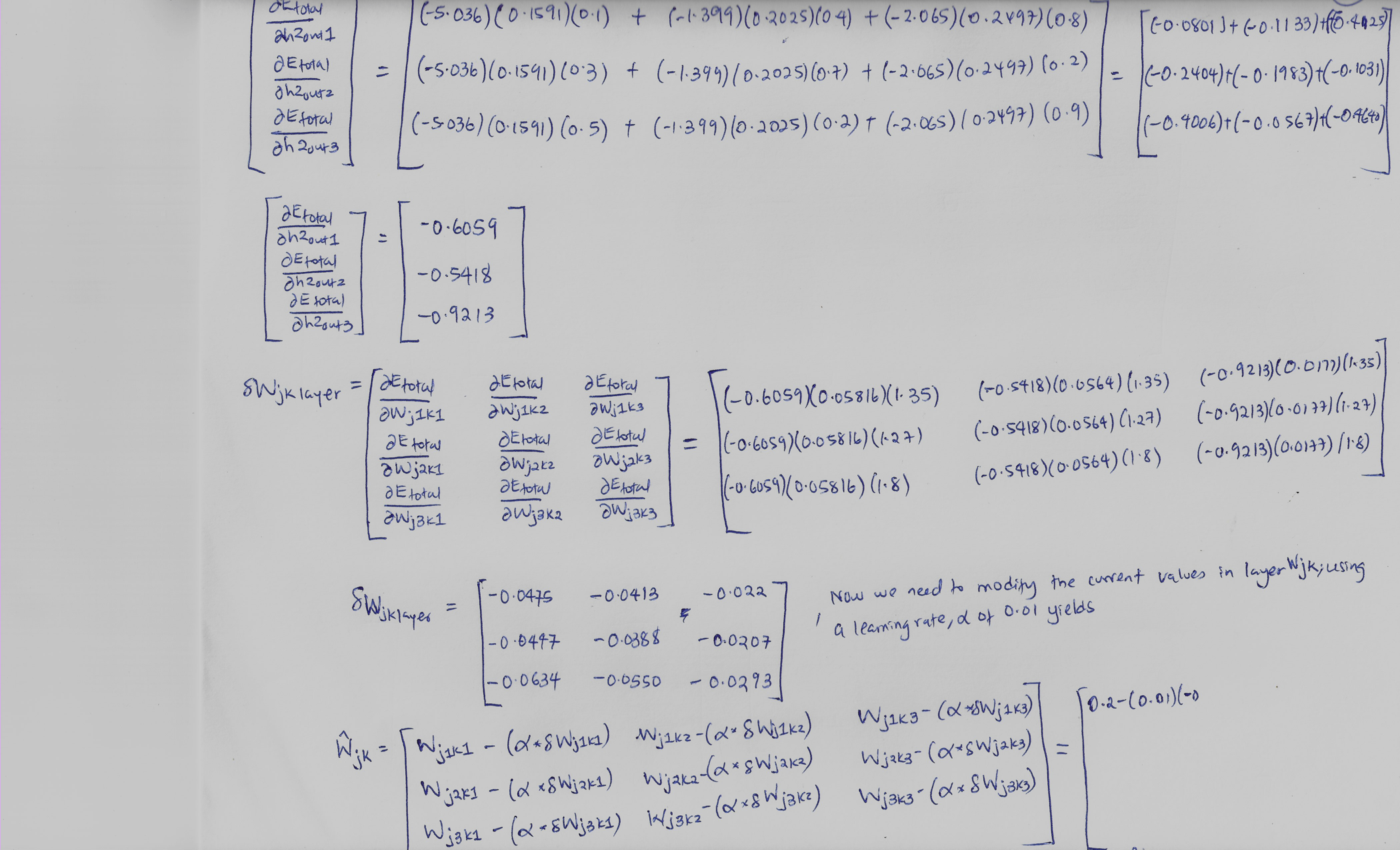

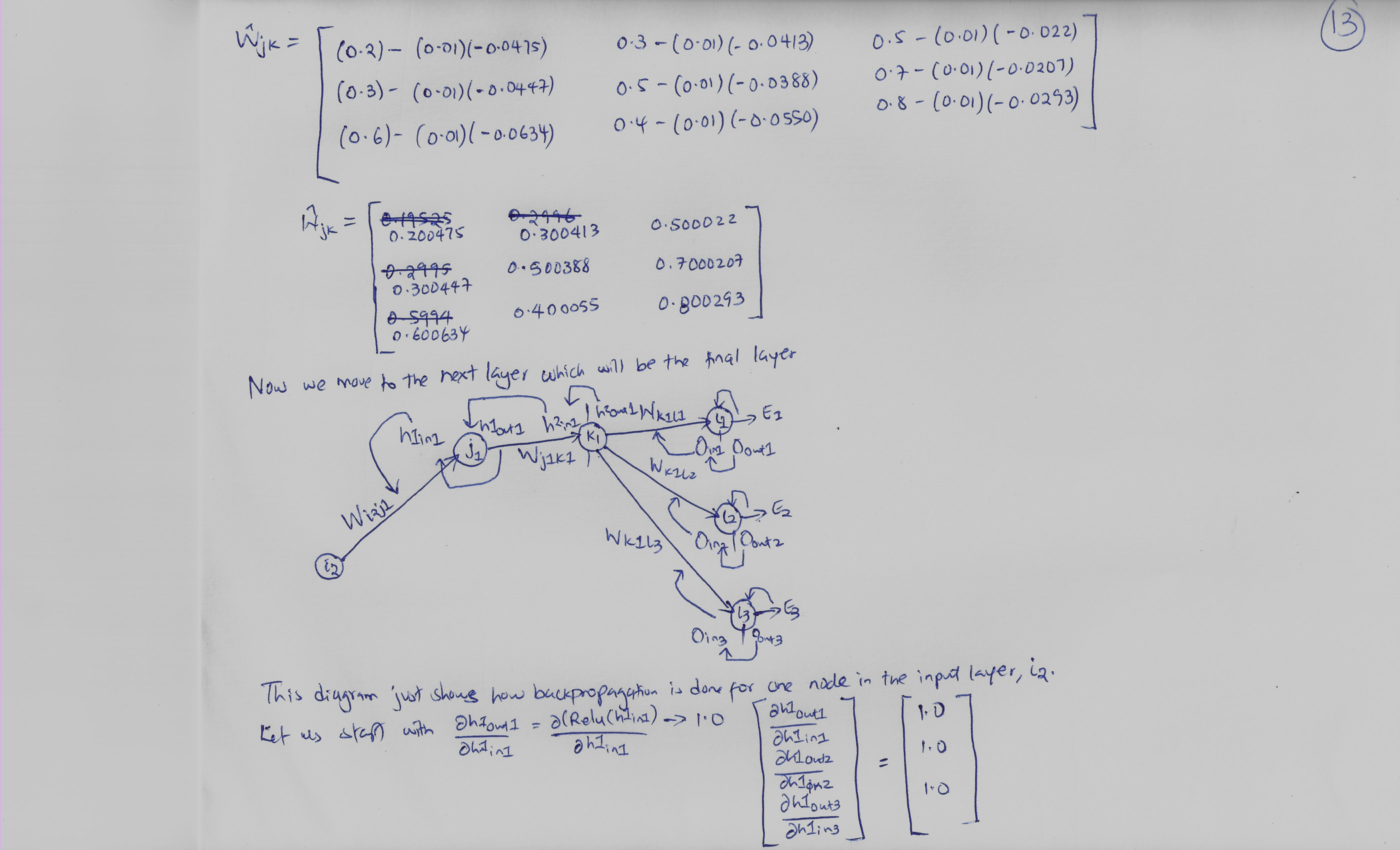

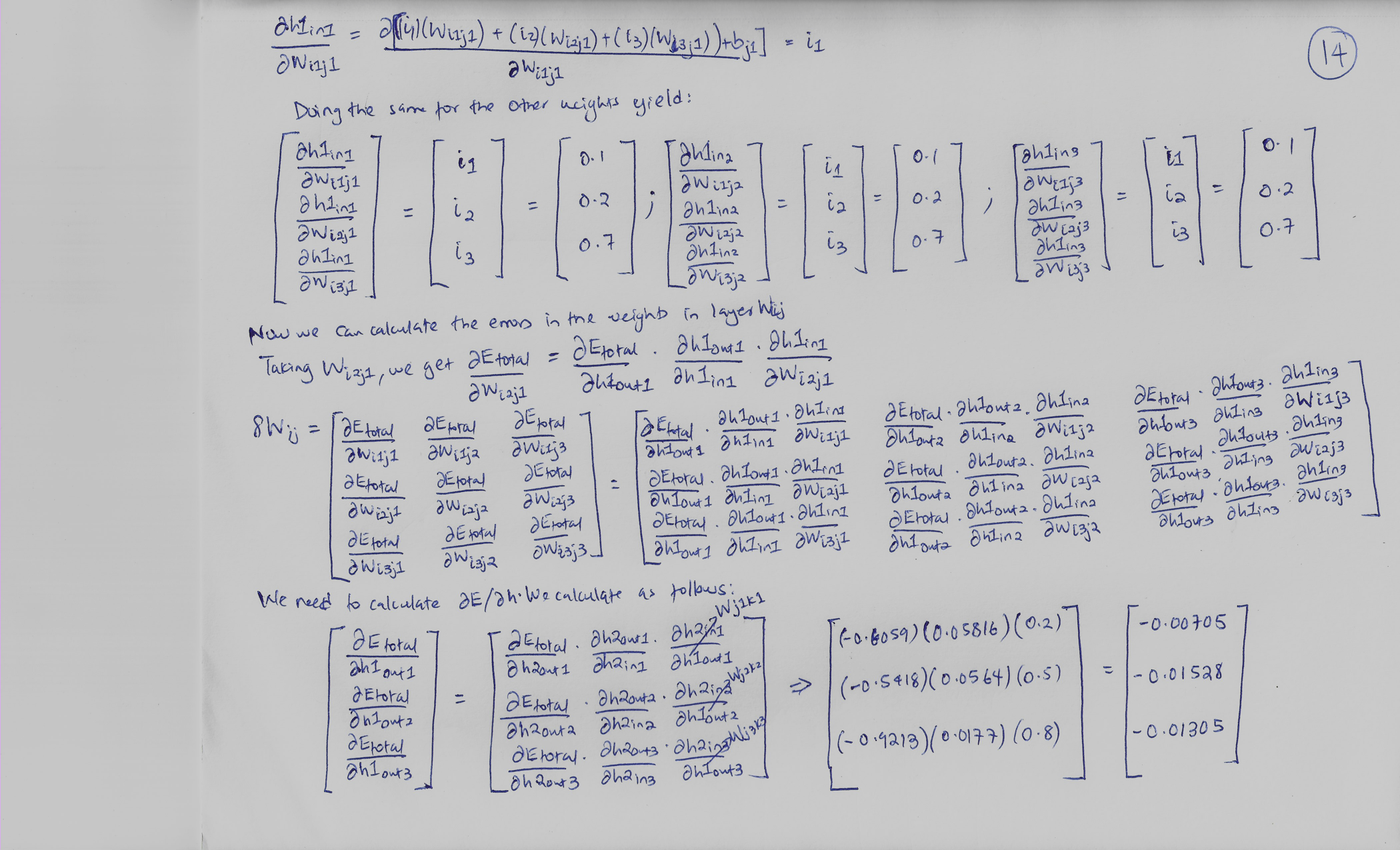

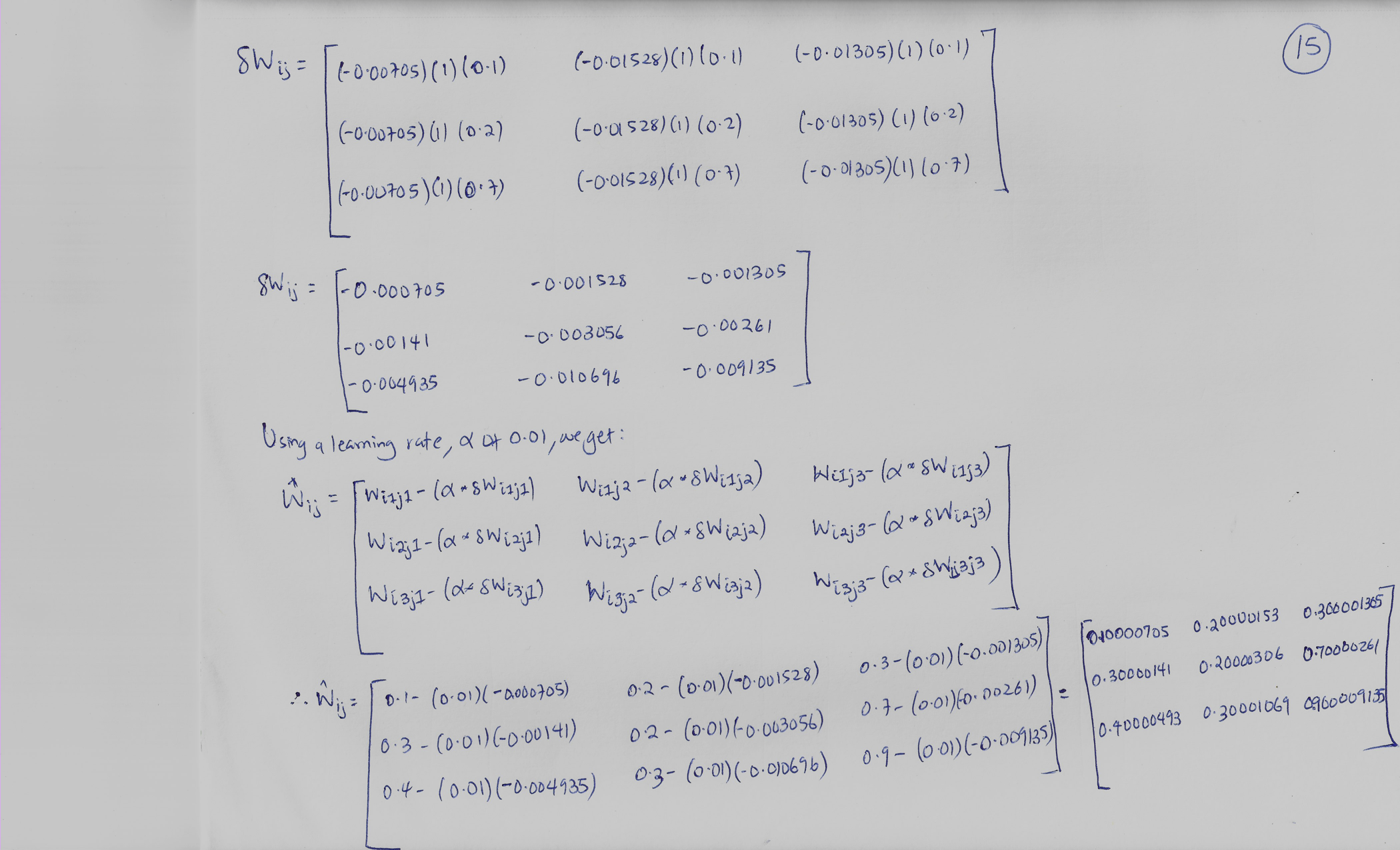

In this blog post, we will go through the full process of feedforward and backpropagation in neural networks. I will demystify backpropagation, which most have accepted as a black box. After completing the math, I will write code that performs the same calculation. This will be particularly helpful for beginners, as they can understand what goes on behind the scenes, especially in backpropagation. Links will be added to assist those who want to dig deeper or gain a better understanding. The activation functions used are the sigmoid function, the Rectified Linear Unit (ReLU), and the softmax function in the output layer. It is not mandatory to use a different activation function in each layer, as is the case in this example.
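To make the three activation functions concrete, here is a minimal sketch of each in plain Python. It uses only the standard library so it runs anywhere; the function names and the sample scores are my own choices for illustration, not taken from the hand-worked math.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified Linear Unit: keeps positive values, replaces negatives with 0."""
    return max(0.0, z)

def softmax(zs):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(zs)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

# Illustrative scores only: the largest score gets the largest probability.
probs = softmax([1.0, 2.0, 3.0])
```

Sigmoid and ReLU act on one value at a time, while softmax needs the whole vector of scores, which is why it is a natural fit for an output layer that picks among classes.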

I welcome questions from readers, and I will add notes and links to videos to this blog post as questions come in, to provide as much clarity as possible. I did the math by hand in order to understand it and to be able to explain it to anyone who has questions. A calculus background is beneficial but not mandatory, as it helps in understanding the chain rule for differentiation.
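For readers new to the chain rule, a small sketch may help. It assumes a single sigmoid neuron with a squared-error loss, which is simpler than the network in this post and is used here only for illustration; the names and numbers are made up. The gradient of the loss with respect to the weight is the product of three local derivatives, and a finite-difference estimate serves as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron: L = 0.5 * (a - y)^2."""
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def grad_w(w, b, x, y):
    """dL/dw via the chain rule: dL/da * da/dz * dz/dw."""
    z = w * x + b
    a = sigmoid(z)
    dL_da = a - y          # derivative of the squared error
    da_dz = a * (1.0 - a)  # derivative of the sigmoid
    dz_dw = x              # derivative of w*x + b with respect to w
    return dL_da * da_dz * dz_dw

# Illustrative values, not taken from the worked example.
w, b, x, y = 0.5, 0.1, 2.0, 1.0
analytic = grad_w(w, b, x, y)

# Central finite difference as an independent check.
eps = 1e-6
numeric = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
```

If the chain-rule derivation is right, `analytic` and `numeric` agree to several decimal places. Backpropagation applies this same product of local derivatives layer by layer.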

Here is the Python code for this mathematical calculation:
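What follows is a minimal sketch of such a calculation, not the original listing. It assumes a tiny network with two inputs, a ReLU hidden layer, a sigmoid hidden layer, and a softmax output with cross-entropy loss. The layer sizes, weights, input, one-hot target, learning rate, and all function names are made up for illustration and do not come from the hand-worked math.

```python
import math

def affine(W, v, b):
    """Return W v + b, with W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def softmax(zs):
    m = max(zs)  # shift by the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def forward(params, x):
    """Feedforward pass; returns the intermediate values backprop needs."""
    (W1, b1), (W2, b2), (W3, b3) = params
    z1 = affine(W1, x, b1)
    a1 = [max(0.0, z) for z in z1]                 # ReLU
    z2 = affine(W2, a1, b2)
    a2 = [1.0 / (1.0 + math.exp(-z)) for z in z2]  # sigmoid
    p = softmax(affine(W3, a2, b3))                # softmax output
    return z1, a1, a2, p

def cross_entropy(p, y):
    return -sum(yi * math.log(pi) for yi, pi in zip(y, p))

def outer(d, a):
    """Outer product: the weight gradient for one layer."""
    return [[di * aj for aj in a] for di in d]

def back(W, d):
    """Return W^T d: push an error signal back through a weight matrix."""
    return [sum(W[k][j] * d[k] for k in range(len(d))) for j in range(len(W[0]))]

def backward(params, x, y):
    """Backpropagation; returns (dW, db) per layer. Assumes y is one-hot."""
    (W1, b1), (W2, b2), (W3, b3) = params
    z1, a1, a2, p = forward(params, x)
    d3 = [pi - yi for pi, yi in zip(p, y)]  # softmax + cross-entropy
    d2 = [g * a * (1.0 - a) for g, a in zip(back(W3, d3), a2)]  # sigmoid'
    d1 = [g * (1.0 if z > 0 else 0.0) for g, z in zip(back(W2, d2), z1)]  # ReLU'
    return [(outer(d1, x), d1), (outer(d2, a1), d2), (outer(d3, a2), d3)]

def sgd_step(params, grads, lr):
    """One gradient-descent update, applied in place."""
    for (W, b), (dW, db) in zip(params, grads):
        for i in range(len(W)):
            b[i] -= lr * db[i]
            for j in range(len(W[i])):
                W[i][j] -= lr * dW[i][j]

# Made-up weights, input, and target for illustration.
W1, b1 = [[0.1, 0.2], [0.3, 0.4]], [0.1, 0.1]
W2, b2 = [[0.5, -0.6], [0.7, 0.8]], [0.0, 0.0]
W3, b3 = [[0.9, -0.1], [-0.2, 0.3]], [0.0, 0.0]
params = [(W1, b1), (W2, b2), (W3, b3)]
x, y = [1.0, 2.0], [1.0, 0.0]

loss_before = cross_entropy(forward(params, x)[-1], y)
sgd_step(params, backward(params, x, y), lr=0.1)
loss_after = cross_entropy(forward(params, x)[-1], y)
```

After a single update with a small learning rate, `loss_after` should come out lower than `loss_before`. Repeating the forward pass, backward pass, and update is what training amounts to.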

References:

- https://medium.com/@14prakash/back-propagation-is-very-simple-who-made-it-complicated-97b794c97e5c